Workflow

Suzannease Interpretation of the BHL workflow and the requirements to get this darn thing done.

(comments by EC and BS from NHM are marked “EC @ NHM” and “BS @ NHM”)

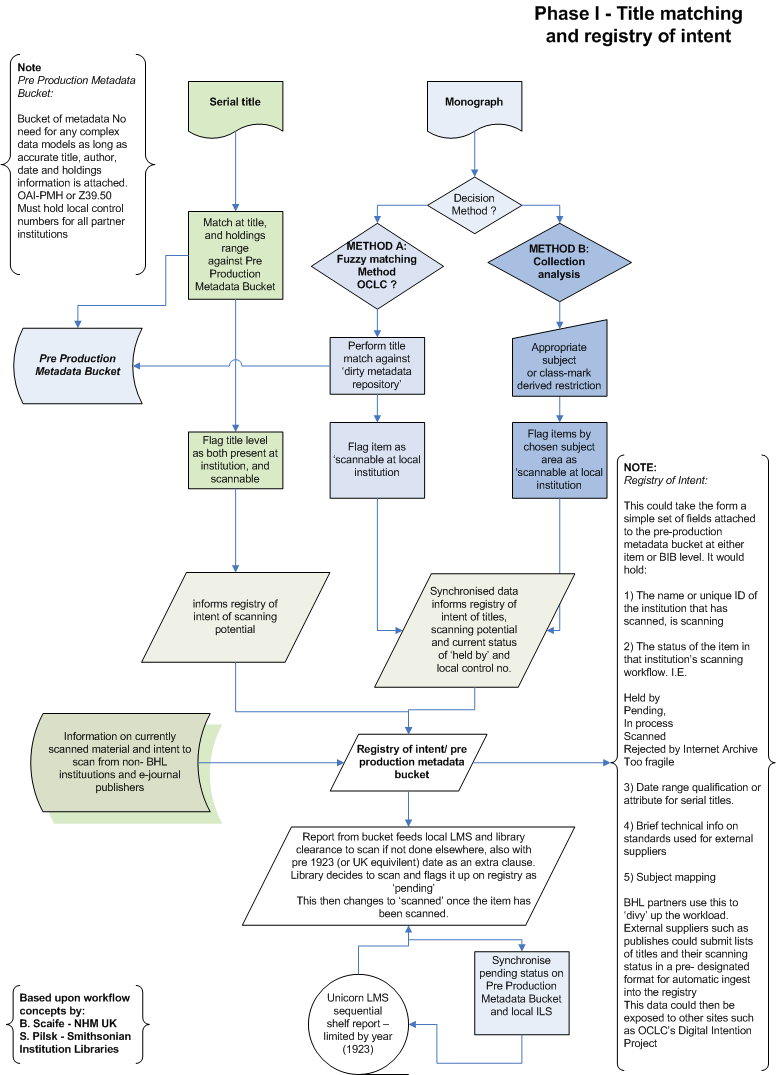

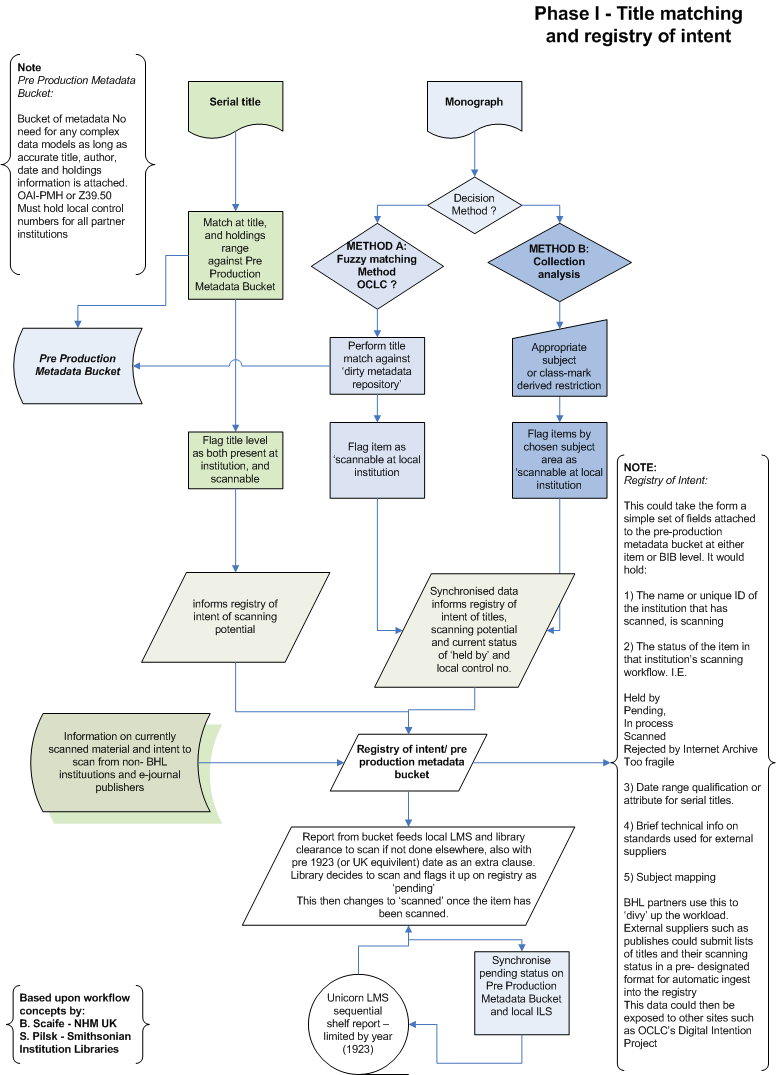

Phase I. Material Selected to Be Brought to the Scanner

• Someone calls someone and chooses the subject area to scan. Scanning begins.

1. Bookpuller Technician ensures the barcode on the item is linked to the bib record in the institution’s ILS or in the Bucket of Metadata

a. The Bucket of Metadata is a dirty bucket of whatever we have pulled together and mashed up. This could be at OCLC. It could be on a hard drive somewhere in India. The right data needs to be exposed in a way that ensures proper matching.

b. The individual ILS will need to have the data exposed so that the barcode number is fetchable by Z39.50 or some other foolproof way.

c. The Bucket of Metadata or ILSs will need to provide circulation status to indicate that the title is at the scanner or has been returned to some location.

d. The Bucket or ILSs need to handle data coming back from scanning stations regarding rejections and other notes, including alerts that steps have been taken, for tracking purposes.
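A minimal sketch of the barcode check in step 1, assuming the ILS and the Bucket of Metadata can each be queried as a simple barcode-to-record lookup (in practice this would sit behind Z39.50 or similar). The names `BarcodeResolver` and `resolve` are hypothetical; the alert list stands in for the tracking trail in item d.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class BibRecord:
    bib_id: str
    title: str
    source: str  # where the match was found: "ILS" or "bucket"


@dataclass
class BarcodeResolver:
    """Hypothetical resolver: institution ILS first, then the Bucket of Metadata."""
    ils: dict = field(default_factory=dict)     # barcode -> (bib_id, title)
    bucket: dict = field(default_factory=dict)  # barcode -> (bib_id, title)
    alerts: list = field(default_factory=list)  # (timestamp, barcode, message) trail

    def resolve(self, barcode: str) -> BibRecord:
        barcode = barcode.strip()  # tolerate stray whitespace from the wand
        for source, table in (("ILS", self.ils), ("bucket", self.bucket)):
            if barcode in table:
                bib_id, title = table[barcode]
                self._alert(barcode, f"matched in {source} as {bib_id}")
                return BibRecord(bib_id, title, source)
        self._alert(barcode, "no match; item should not go to the scanner")
        raise LookupError(f"barcode {barcode} is not linked to any bib record")

    def _alert(self, barcode: str, message: str) -> None:
        stamp = datetime.now(timezone.utc).isoformat(timespec="seconds")
        self.alerts.append((stamp, barcode, message))
```

Checking the ILS before the bucket is a design choice here, on the assumption that the holding institution's own record is the more trustworthy match.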

EC @ NHM:

Bucket of metadata could form a simple database (or even XML stack?); there is no need for any complex data models as long as accurate title, author, date and holdings information is attached. OAI-PMH or Z39.50 could retrieve this information from partners. This could be created by OCLC from their combined catalogue dump. Alternatively, the NHM will soon gain source code for a SQL Server/Java-based joint catalogue that can perform automatic harvests of sites via Z39.50 and automatically assemble a Z39.50-enabled joint catalogue, as part of the Linnaeus Link project. If scalable enough, this could potentially form a platform for development.

a) The dirty Bucket of Metadata could also form the basis of the Registry of Intent, for both BHL members and external suppliers. This could take the form of a simple set of fields attached to the pre-production metadata bucket at either item or BIB level. It would hold:

1) The name or unique ID of the institution that has scanned or is scanning the item

2) The status of the item in that institution’s scanning workflow, i.e.:

Held by, Pending, In process, Scanned, Rejected by Internet Archive, Too fragile

3) Date range qualification or attribute for serial titles.

BHL partners could use this to ‘divvy’ up the workload. External suppliers such as publishers could submit lists of titles and their scanning status in a pre-designated format for automatic ingest into the registry. As BHL partners will need to co-ordinate to ensure they do not scan the same large run of journals, this kind of functionality will be required here anyway. It then makes sense to me to start involving publishers etc. at an earlier stage, not
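One way the Registry of Intent fields above could be modelled, as a sketch. The status values come from the list above; everything else (`IntentEntry`, `conflicting_claims`, treating an entry with no date range as covering the whole title, and treating “Held by”, “Rejected by Internet Archive” and “Too fragile” as non-blocking) is an assumption for illustration. It shows how partners could divvy up a serial run without overlapping.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class ScanStatus(Enum):
    # Values taken from the status list above
    HELD_BY = "Held by"
    PENDING = "Pending"
    IN_PROCESS = "In process"
    SCANNED = "Scanned"
    REJECTED_BY_IA = "Rejected by Internet Archive"
    TOO_FRAGILE = "Too fragile"


@dataclass(frozen=True)
class IntentEntry:
    bib_id: str            # item- or BIB-level key in the dirty bucket
    institution: str       # name or unique ID of the scanning institution
    status: ScanStatus
    years: Optional[tuple[int, int]] = None  # inclusive date range for serial runs


def overlaps(a: tuple[int, int], b: tuple[int, int]) -> bool:
    return a[0] <= b[1] and b[0] <= a[1]


def conflicting_claims(registry: list[IntentEntry], proposed: IntentEntry) -> list[IntentEntry]:
    """Entries by *other* institutions that already cover part of the proposed claim."""
    blocking = {ScanStatus.PENDING, ScanStatus.IN_PROCESS, ScanStatus.SCANNED}
    hits = []
    for entry in registry:
        if entry.bib_id != proposed.bib_id or entry.institution == proposed.institution:
            continue
        if entry.status not in blocking:
            continue
        # Assumption: a claim without a date range covers the whole title.
        if entry.years is None or proposed.years is None or overlaps(entry.years, proposed.years):
            hits.append(entry)
    return hits
```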

2. Scanner wands in the barcode and pulls up the proper metadata record at title level

a. This follows the current model at IA

b. Scanner needs to have a “reject” workflow that records in the Metadata Repository that the title was rejected, with notes as to why.

a. Rejection might be that the title is “already at a scanner” or “scanned”

b. Scanner needs to record any other problems or notes: missing pages, missing volumes, foldouts of a different size

c. Any other notes from scanner that might be needed for other processes

d. Circulation issues need to be addressed, with the scanner “checking out” the title and “returning” it when complete.
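A sketch of the scanner-side check-out and reject loop in items b to d, assuming circulation status lives in the Metadata Repository as one of three states. `ScanDesk`, `Circ` and their methods are hypothetical names, not part of any existing scanning software.

```python
from dataclasses import dataclass, field
from enum import Enum


class Circ(Enum):
    ON_SHELF = "on shelf"
    AT_SCANNER = "at scanner"
    SCANNED = "scanned"


@dataclass
class ScanDesk:
    """Hypothetical scanner-side view of the repository's circulation data."""
    status: dict = field(default_factory=dict)  # bib_id -> Circ
    notes: dict = field(default_factory=dict)   # bib_id -> list of free-text notes

    def check_out(self, bib_id: str, station: str) -> tuple[bool, str]:
        current = self.status.get(bib_id, Circ.ON_SHELF)
        if current is Circ.AT_SCANNER:
            return self.reject(bib_id, "already at a scanner")
        if current is Circ.SCANNED:
            return self.reject(bib_id, "scanned")
        self.status[bib_id] = Circ.AT_SCANNER
        self.add_note(bib_id, f"checked out to {station}")
        return True, "checked out"

    def reject(self, bib_id: str, reason: str) -> tuple[bool, str]:
        self.add_note(bib_id, f"rejected: {reason}")
        return False, reason

    def add_note(self, bib_id: str, note: str) -> None:
        # e.g. missing pages, missing volumes, odd-sized foldouts
        self.notes.setdefault(bib_id, []).append(note)

    def check_in(self, bib_id: str, completed: bool = True) -> None:
        self.status[bib_id] = Circ.SCANNED if completed else Circ.ON_SHELF
        self.add_note(bib_id, "returned" if completed else "returned unscanned")
```

Rejections are logged as notes rather than silently refused, so the tracking trail shows why an item bounced.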

EC @ NHM:

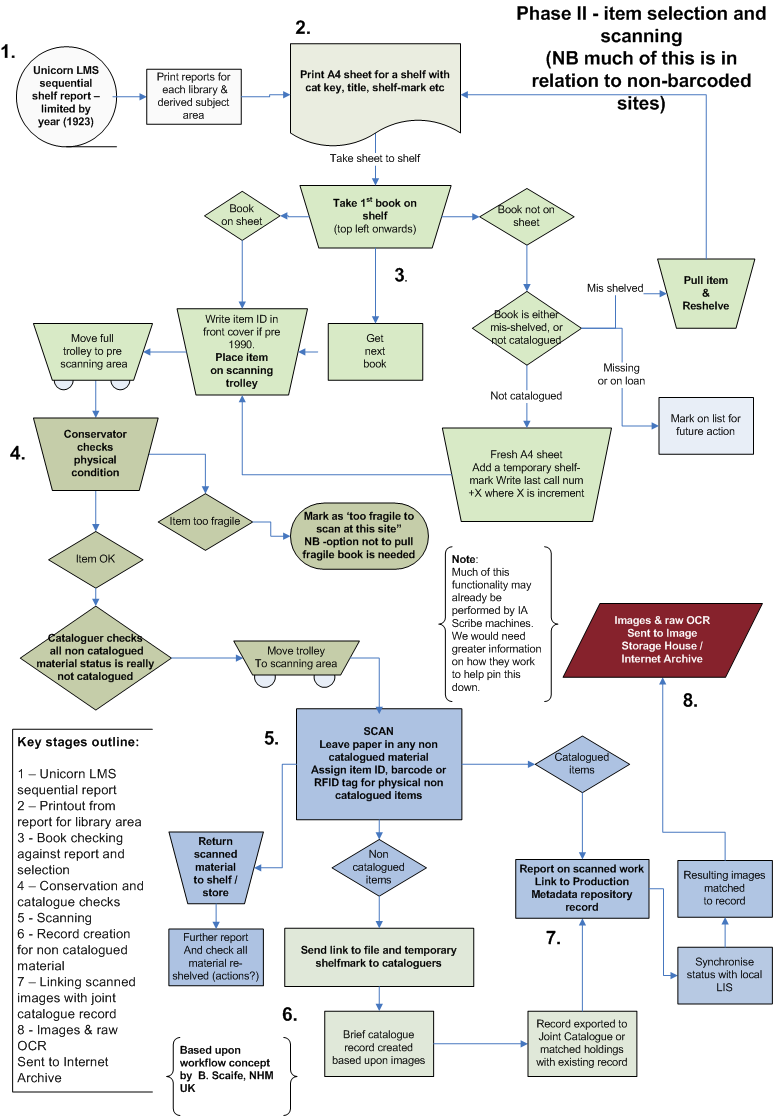

Most of this depends on items already being barcoded, with complete MARC records in a local LMS. Stock at the NHM is not currently barcoded, and this may be the case for some other sites. We are also unsure of the number of uncatalogued books.

Some amendment to the workflow here will be necessary for us.

(See attached workflow diagrams, stage 2.)

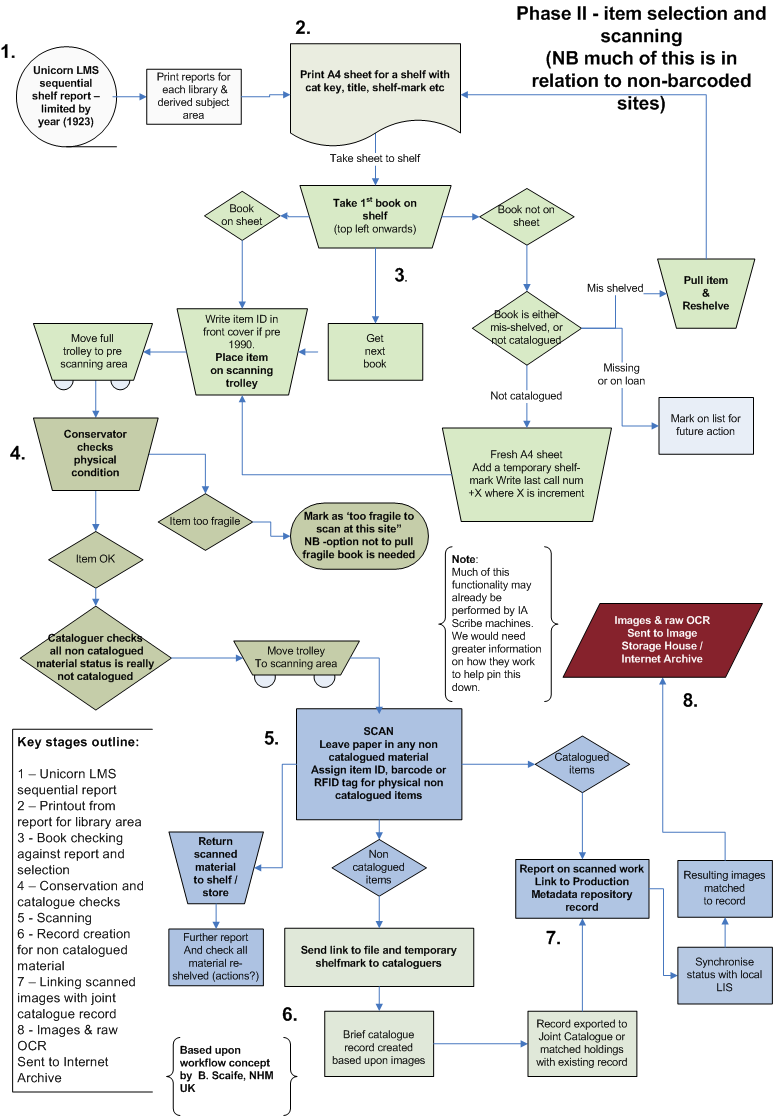

3. Scanning begins

4. Multivolume pieces of a title

a. At each change of physical piece, the scanner wands barcode.

b. Scanning software changes file name for next set of images

c. Magic happens that keeps the volumes together under the proper title level metadata but indicates volume changes.

EC @ NHM:

Good point. Would need to be built into the scanning software, or be expressed in the filename/ GUID of each scanned page. Internet Archive’s Scribe Machines may have this functionality already. Would need precise specs and workflow examples from IA.
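A sketch of expressing the volume change in the filename, as suggested above. The pattern `<title GUID>_v<volume>_p<page>.<ext>` is a hypothetical convention, not an IA or Scribe format; each barcode wand starts a new volume sequence under the same title.

```python
import re

# Hypothetical convention: <title GUID>_v<volume seq>_p<page seq>.<ext>
FILENAME = re.compile(
    r"^(?P<title>[A-Za-z0-9-]+)_v(?P<vol>\d{3})_p(?P<page>\d{5})\.(?P<ext>tif|jp2)$"
)


def page_filename(title_guid: str, volume: int, page: int, ext: str = "tif") -> str:
    """Build a filename carrying the title key plus volume and page sequence."""
    if not re.fullmatch(r"[A-Za-z0-9-]+", title_guid):
        # "_" is reserved as the field separator
        raise ValueError("title GUID may only contain letters, digits and hyphens")
    return f"{title_guid}_v{volume:03d}_p{page:05d}.{ext}"


def parse_filename(name: str) -> tuple[str, int, int]:
    m = FILENAME.match(name)
    if m is None:
        raise ValueError(f"not a conforming filename: {name}")
    return m["title"], int(m["vol"]), int(m["page"])


class ScanSession:
    """Each barcode wand starts a new physical volume under the same title."""

    def __init__(self, title_guid: str) -> None:
        self.title_guid = title_guid
        self.volume = 0
        self.page = 0
        self.barcodes: dict[int, str] = {}  # volume seq -> item barcode

    def wand(self, barcode: str) -> None:
        self.volume += 1
        self.page = 0
        self.barcodes[self.volume] = barcode

    def capture(self, ext: str = "tif") -> str:
        if self.volume == 0:
            raise RuntimeError("wand a barcode before capturing pages")
        self.page += 1
        return page_filename(self.title_guid, self.volume, self.page, ext)
```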

5. Scanner creates images and sends them to the Image Storage House with a GUID

a. This could be Internet Archive:

1. TIFFs?

2. JPEG 2000?

3. Other things?

b. If scanning has been done through a different workflow, the deliverables need to be:

1. images that can then be processed

2. metadata at title level in a compatible format

3. a file naming structure that can be used

4. files that fit the requirements of the steps below

5. all in formats that can be stored in the Image Storage House

6. metadata that can be ingested into the Intent to Scan/ Already has been scanned aspect of the Bucket of Metadata (dirty bucket)

7. Required metadata at the right levels for the clean New Production Level Metadata Bucket

8. Proper GUID assigned

9. Other things I forgot

EC @ NHM:

We all probably have a lot of ‘legacy’ material of various forms and quality. A brief spec certainly needs to be written; the above all seems quite sound. Information on partner institutions’ ‘legacy’ scans that meet the minimum required quality also needs to be recorded in the registry of intent.
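The deliverables list could be turned into an automatic ingest check. This is a sketch assuming a hypothetical manifest with the keys shown; the accepted image formats (TIFF, JPEG 2000) are placeholders until the spec is written.

```python
from pathlib import PurePosixPath

# Hypothetical manifest keys mirroring the deliverables list above.
REQUIRED_KEYS = {"title_metadata", "guid", "files", "intent_metadata"}
# Placeholder: TIFF and JPEG 2000 until the spec settles the storable formats.
ACCEPTED_IMAGE_TYPES = {".tif", ".tiff", ".jp2"}


def check_deliverable(manifest: dict) -> list[str]:
    """Return a list of problems; an empty list means the package can be ingested."""
    problems = [f"missing {key}" for key in sorted(REQUIRED_KEYS - set(manifest))]
    for name in manifest.get("files", []):
        if PurePosixPath(name).suffix.lower() not in ACCEPTED_IMAGE_TYPES:
            problems.append(f"unsupported format: {name}")
    title_md = manifest.get("title_metadata") or {}
    if "title_metadata" in manifest and not title_md.get("title"):
        problems.append("title level metadata has no title")
    return problems
```

Returning every problem at once, rather than failing on the first, suits legacy batches where a supplier will want the whole list to fix.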

c. Some sort of notification needs to happen that the scans have been sent to the Image Storage House, either from the scanner or from some other depositing mechanism. This alerts people to do the “next steps”, whatever those might be.

d. Quality control needs to fit in here, with its inputs, actions and results built into the workflow.

EC @ NHM:

The scanning warehouse could itself provide daily automated reports on new items scanned to both scanning institutions and those involved in ‘post production enrichment’ (i.e. all the cool stuff!). This could also include a list of rejections (i.e., out of focus, corrupted file or whatever). Such a report would also be a useful management tool for those managing scanning ‘pods’. (Have I just said the same thing as Susanne?)
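A sketch of the daily report described above, assuming the warehouse logs one event per item with a date, a kind (`scanned` or `rejected`), the institution and, for rejections, a reason. The field names and `daily_report` are hypothetical.

```python
from collections import Counter, defaultdict
from datetime import date


def daily_report(events: list[dict], day: date) -> str:
    """Summarise one day's warehouse events per scanning institution."""
    scanned = Counter()
    rejections = defaultdict(list)
    for e in events:
        if e["date"] != day:
            continue
        if e["kind"] == "scanned":
            scanned[e["institution"]] += 1
        elif e["kind"] == "rejected":
            rejections[e["institution"]].append(f'{e["item"]}: {e["reason"]}')
    lines = [f"Scanning report for {day.isoformat()}"]
    for inst in sorted(set(scanned) | set(rejections)):
        lines.append(f"{inst}: {scanned[inst]} new item(s), {len(rejections[inst])} rejection(s)")
        lines.extend(f"  rejected {r}" for r in rejections[inst])
    return "\n".join(lines)
```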

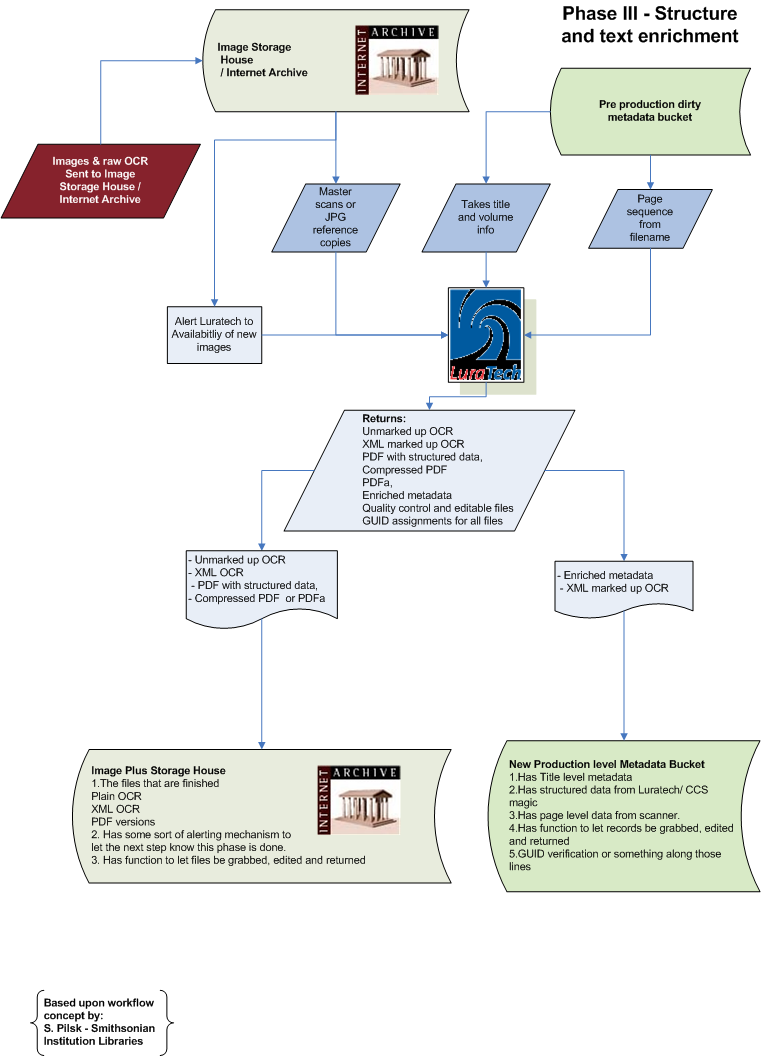

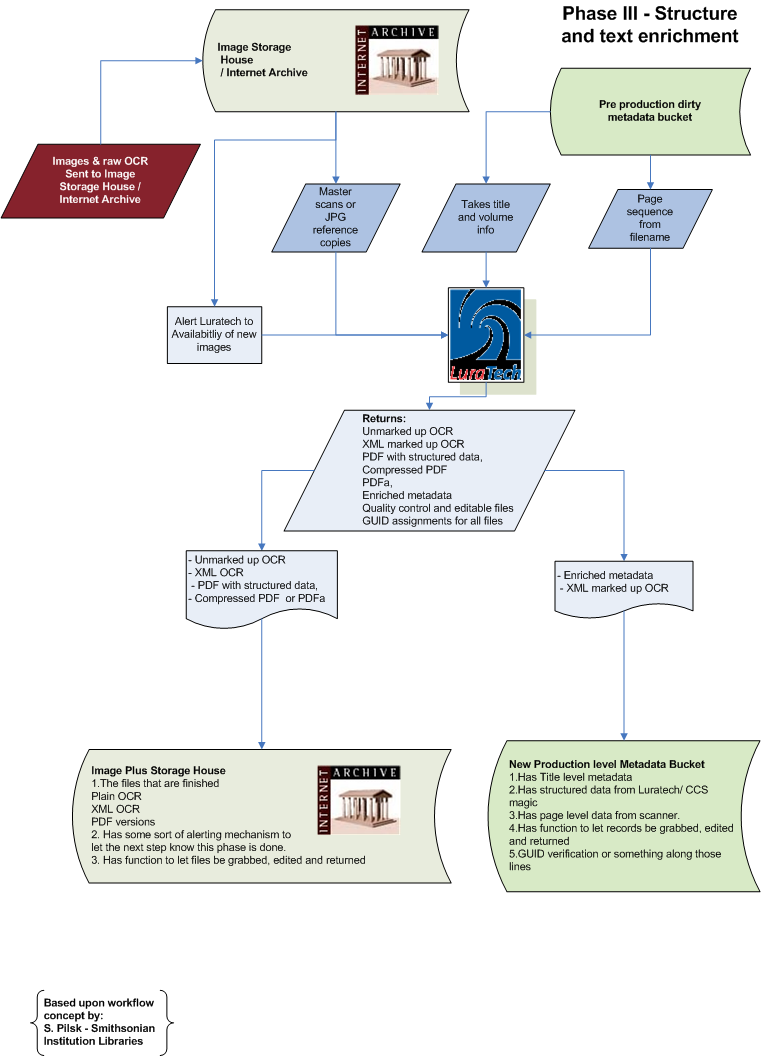

Phase II. Luratech/CCS whiz kids are told that the Image Storage House now has images with title level metadata

A. Luratech/CCS fill in the requirements they need to have

1. Title level metadata is available

2. File naming structure from scanner indicates physical volume change

3. Page level information is available from the scanner that includes something or other.

EC @ NHM:

We clearly need to pin down the file naming early on if scanning is going to commence before the ‘dirty bucket’ is in place. The SEEP should help us iron out this kind of detail. This brings me back to the old argument of filenames versus metadata, but with this kind of workflow, I think clear file naming conventions are a must, as all image/page level metadata is going to be auto-generated at a much later stage than creation.
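On the Luratech/CCS side, physical volume changes could be recovered from filenames alone, provided the convention is fixed. This sketch assumes a hypothetical `<title>_v<volume>_p<page>.<ext>` pattern and refuses any batch with page gaps, mixed titles or nonconforming names rather than guessing.

```python
import re
from itertools import groupby

# Hypothetical convention: <title>_v<volume>_p<page>.<ext>
NAME = re.compile(r"^(?P<title>[A-Za-z0-9-]+)_v(?P<vol>\d+)_p(?P<page>\d+)\.\w+$")


def volumes_from_filenames(names: list[str]) -> dict[int, list[str]]:
    """Group page files by physical volume, in page order; reject anything ambiguous."""
    parsed = []
    titles = set()
    for n in names:
        m = NAME.match(n)
        if m is None:
            raise ValueError(f"filename breaks the convention: {n}")
        titles.add(m["title"])
        parsed.append((int(m["vol"]), int(m["page"]), n))
    if len(titles) > 1:
        raise ValueError(f"batch mixes titles: {sorted(titles)}")
    parsed.sort()  # volume first, then page
    volumes = {}
    for vol, group in groupby(parsed, key=lambda t: t[0]):
        pages = list(group)
        if [p for _, p, _ in pages] != list(range(1, len(pages) + 1)):
            raise ValueError(f"volume {vol} has missing or duplicate pages")
        volumes[vol] = [n for _, _, n in pages]
    return volumes
```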

B. Luratech/CCS Loop

1. Take scans.

2. Do magic

3. Return OCR, XML-marked-up OCR, PDF with structured data, compressed PDF, PDF/A, and metadata for the New Production Level Metadata Bucket

4. Returned files are stored in the Image Plus Storage House and / or New Production Level Metadata Bucket

5. Quality control and editable files

6. GUID assignments

EC @ NHM:

Sounds great!

C. New Production Level Metadata Bucket

1. Has Title level metadata

2. Has structured data from Luratech/ CCS magic

3. Has page level data from scanner.

4. Has function to let files be grabbed, edited and returned

5. GUID verification or something along those lines

EC @ NHM:

Sounds really great!

D. Image Plus Storage House

1. All the files that are finished

2. Has some sort of alerting mechanism to let the next step know this phase is done.

3. Has function to let files be grabbed, edited and returned

Sounds really great!

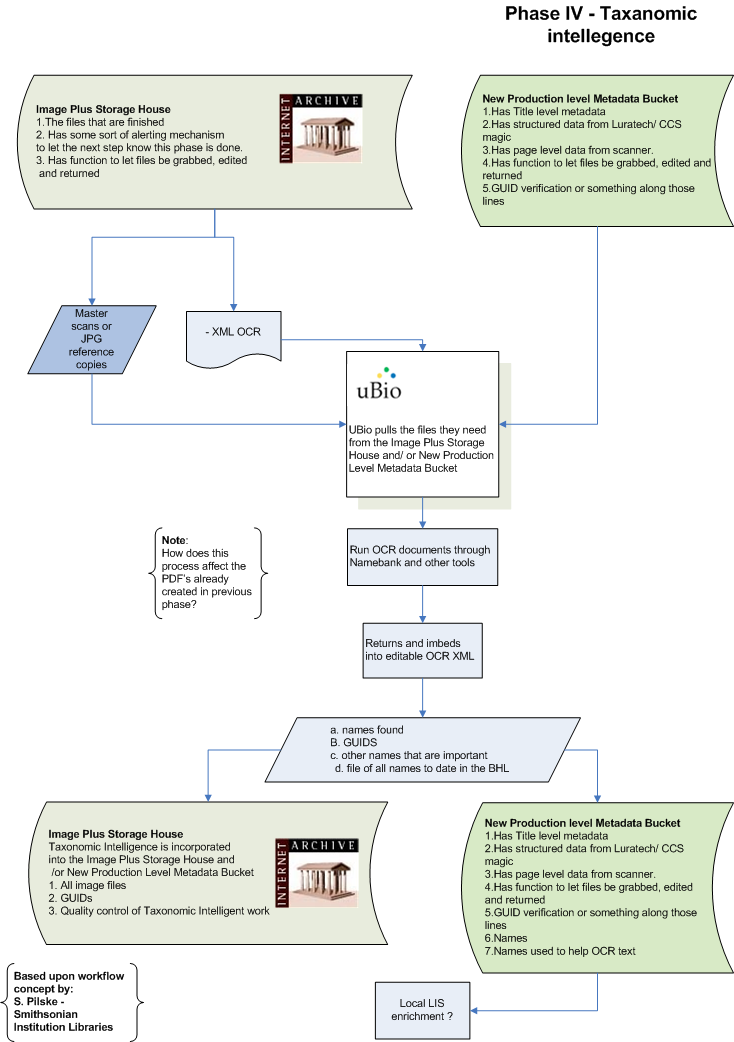

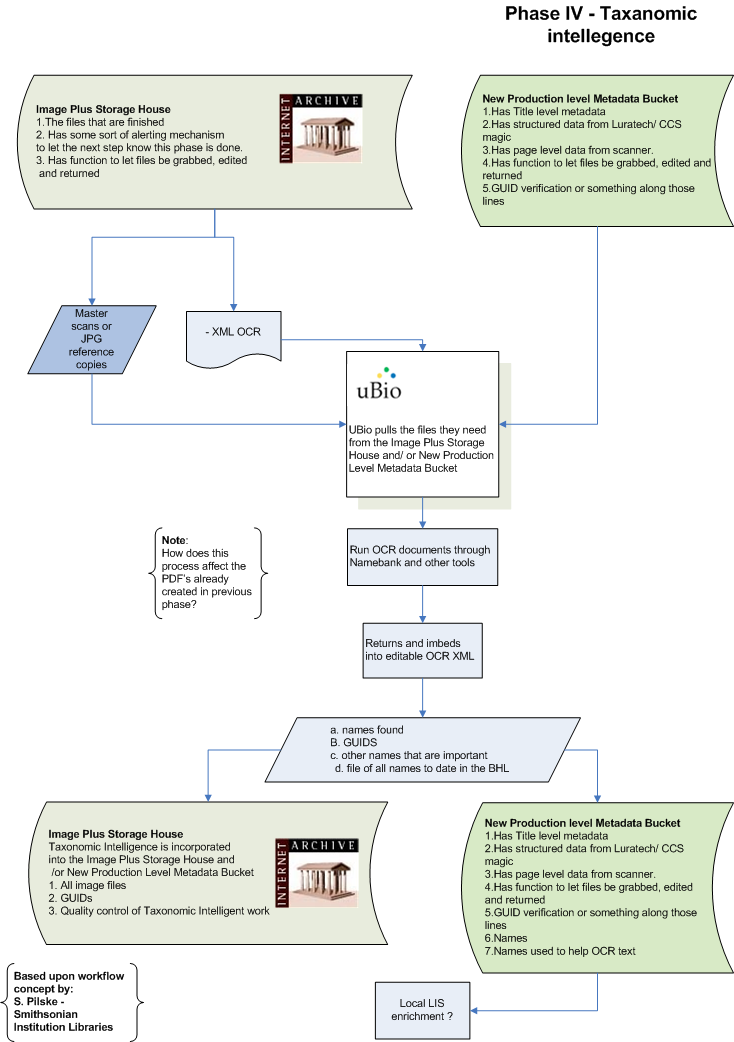

Phase III. Taxonomic Intelligent Tool is told that the Production Level Output is Available to be scanned for intelligent life

A. uBio whiz kids pull the files they need from the Image Plus Storage House and/or New Production Level Metadata Bucket

1. Run OCR documents through Namebank and other tools

2. Returns and embeds into editable OCR XML:

a. names found

b. GUIDS

c. other names that are important

d. file of all names to date in the BHL

3. Taxonomic Intelligence is incorporated into the Image Plus Storage House and /or New Production Level Metadata Bucket

a. files

b. GUIDs

c. Names

d. Names used to help OCR text

e. Quality control of Taxonomic Intelligent work
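A sketch of step 2 (embedding found names into editable OCR XML), assuming a simple `<page>` XML layout. It does not call Namebank or any uBio service: exact string matching against a supplied name list stands in for real name finding, and the `<taxonNames>` and `<name guid=…>` elements are hypothetical.

```python
import re
import xml.etree.ElementTree as ET


def embed_names(page_xml: str, names: dict[str, str]) -> str:
    """Append a <taxonNames> block listing each supplied name found on the page.

    `names` maps a scientific name to an identifier standing in for a GUID.
    Exact string matching here is only a placeholder for real name finding.
    """
    root = ET.fromstring(page_xml)
    text = " ".join(root.itertext())  # flatten the OCR text before adding markup
    block = ET.SubElement(root, "taxonNames")
    for name, guid in sorted(names.items()):
        if re.search(r"\b" + re.escape(name) + r"\b", text):
            ET.SubElement(block, "name", guid=guid).text = name
    return ET.tostring(root, encoding="unicode")
```

Keeping the names in a separate block, rather than wrapping words inline, leaves the OCR text itself untouched and editable.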

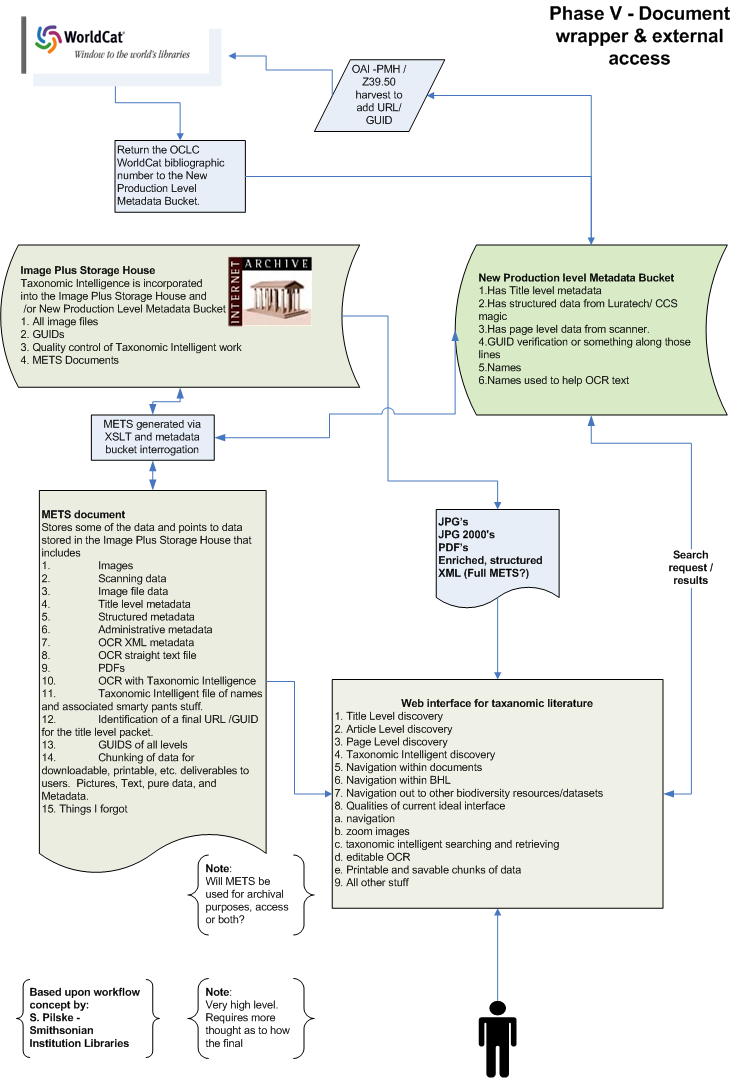

Phase IV. New Production Level Metadata Bucket wraps everything in a blanket/wrapper

A. A METS document is created that stores some of the data and points to data stored in the Image Plus Storage House, including:

1. Images

2. Scanning data

3. Image file data

4. Title level metadata

5. Structured metadata

6. Administrative metadata

7. OCR XML metadata

8. OCR straight text file

9. PDFs

10. OCR with Taxonomic Intelligence

11. Taxonomic Intelligent file of names and associated smarty pants stuff.

12. Identification of a final URL/GUID for the title level packet.

13. GUIDs of all levels

14. Chunking of data for downloadable, printable, etc. deliverables to users. Pictures, Text, pure data, and Metadata.

15. Things I forgot

EC @ NHM:

This is going to be a big chunk of METS for even small documents. The California Digital Library 7Train XSLT tools (http://seventrain.sourceforge.net/7train_documentation.html) could form part of the SEEP as a tool to assemble METS from automatically generated component files.

We will need to pin down our use of the METS schema and register a BHL profile. As for things you forgot, would technical metadata on the images themselves in Z39.87 (NISO MIX) be something to consider? A lot of this overlaps with PREMIS.
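To give a feel for the wrapper, here is a sketch that assembles a bare METS document with Python’s standard ElementTree: a `dmdSec` pointing at the title-level MARC record, a `fileSec` of page images and a physical `structMap`. Only the METS element and attribute names are real; the GUIDs, URLs, the `image/jp2` MIME type and `build_mets` itself are assumptions, and no BHL profile is implied. A real record would also need an `amdSec` (for example Z39.87/MIX) and the OCR and PDF file groups listed above.

```python
import xml.etree.ElementTree as ET

METS = "http://www.loc.gov/METS/"
XLINK = "http://www.w3.org/1999/xlink"
ET.register_namespace("mets", METS)
ET.register_namespace("xlink", XLINK)


def q(ns: str, tag: str) -> str:
    """Clark-notation qualified name, e.g. {http://www.loc.gov/METS/}file."""
    return f"{{{ns}}}{tag}"


def build_mets(title_guid: str, marc_url: str, pages: list[tuple[str, str]]) -> str:
    """pages: (file ID, URL in the Image Plus Storage House) in reading order."""
    mets = ET.Element(q(METS, "mets"), OBJID=title_guid)
    # Title level metadata is referenced, not copied in.
    dmd = ET.SubElement(mets, q(METS, "dmdSec"), ID="DMD1")
    ET.SubElement(dmd, q(METS, "mdRef"),
                  {"LOCTYPE": "URL", "MDTYPE": "MARC", q(XLINK, "href"): marc_url})
    grp = ET.SubElement(ET.SubElement(mets, q(METS, "fileSec")),
                        q(METS, "fileGrp"), USE="master")
    smap = ET.SubElement(mets, q(METS, "structMap"), TYPE="physical")
    volume = ET.SubElement(smap, q(METS, "div"), TYPE="volume", DMDID="DMD1")
    for order, (file_id, url) in enumerate(pages, start=1):
        f = ET.SubElement(grp, q(METS, "file"), ID=file_id, MIMETYPE="image/jp2")
        ET.SubElement(f, q(METS, "FLocat"), {"LOCTYPE": "URL", q(XLINK, "href"): url})
        page = ET.SubElement(volume, q(METS, "div"), TYPE="page", ORDER=str(order))
        ET.SubElement(page, q(METS, "fptr"), FILEID=file_id)
    return ET.tostring(mets, encoding="unicode")
```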

B. Database structures are created, if needed, for displays, indexes, sorts, etc. The database tables are stored in the Image Plus Storage House and/or New Production Level Metadata Bucket

EC @ NHM:

Some random thoughts on this:

• Will the database (clean production bucket) hold all of the information in the METS files? If this is the case, it may make sense to

• Alternatively, will it just hold the access points required for public access, and link back to the METS, with its application stack parsing information from the METS files to present to the user ‘on the fly’ as required? It could just be a simple database bibliographic record that draws all its information from METS and pushes it to the user alongside images, via Chris Freeland’s interface or something similar.

• METS vs. Big Enterprise Relational Database (BERD) is an interesting problem. We need to decide which route to go down with regard to cost, development and sustainability. We will need to work out exactly what should go in the database and what can be housed in XML.

• Is it too early to think this yet, or am I just wrong?

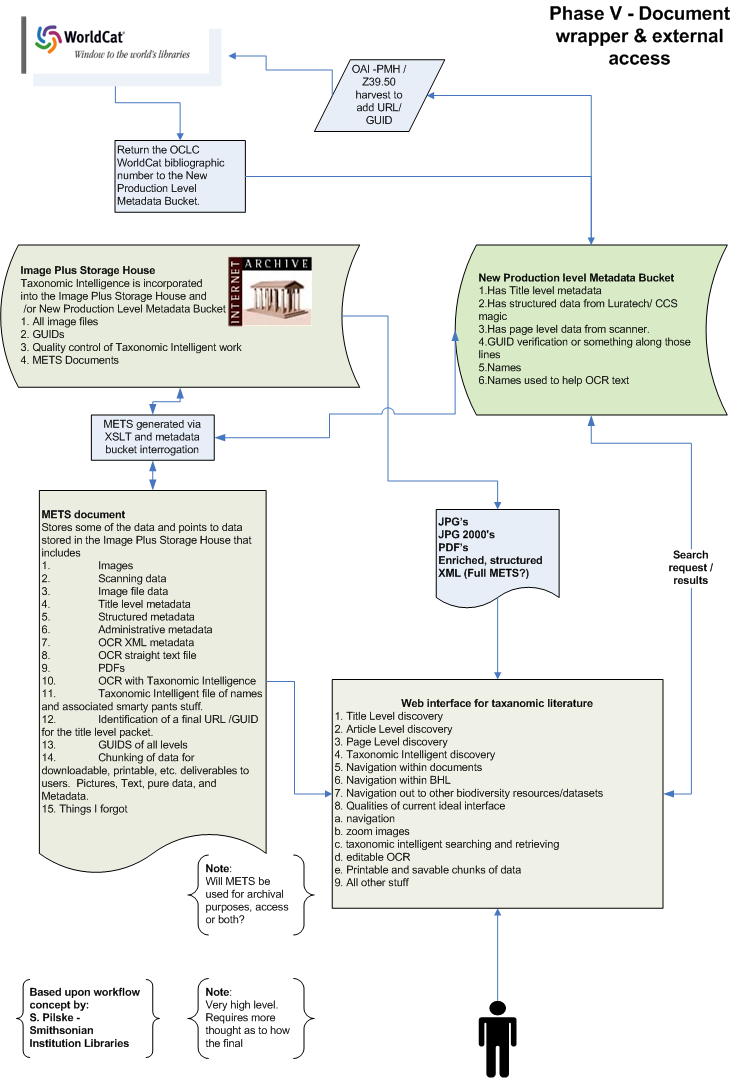

Phase V. Metadata Repository indicates Intention to Scan for all material chosen.

A. The Metadata Bucket that is the dirty beginning mash up could possibly be harvested by OCLC’s Digital Intention Product.

B. The New Production Level Metadata Bucket (also known as the clean bucket) could be harvested by OCLC

1. Put the final URL/GUID for the title level packet into the corresponding OCLC WorldCat record

2. Return the OCLC WorldCat bibliographic number to the New Production Level Metadata Bucket.

i. This OCLC number would be the link from a BHL interface to support a “what library has this title” function for patrons

ii. The OCLC number could be a link to be used for future metadata matching on Intent to Scan/ Already Scanned for material coming in through a different workflow (alternative partners)

BS @ NHM:

Ensure that whichever interface is chosen is OpenURL compliant for external linking.
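For the OpenURL point, a sketch of building an OpenURL 1.0 (Z39.88-2004) key/encoded-value link for a title, carrying the OCLC number from Phase V as an `info:oclcnum/` identifier. The resolver base URL is a placeholder and `openurl_for_book` is a hypothetical name.

```python
from urllib.parse import urlencode


def openurl_for_book(resolver_base: str, title: str, author: str = "",
                     date: str = "", oclc_number: str = "") -> str:
    """OpenURL 1.0 (Z39.88-2004) KEV link describing a book-level title."""
    params = [
        ("url_ver", "Z39.88-2004"),
        ("ctx_ver", "Z39.88-2004"),
        ("rft_val_fmt", "info:ofi/fmt:kev:mtx:book"),
        ("rft.btitle", title),
    ]
    if author:
        params.append(("rft.au", author))
    if date:
        params.append(("rft.date", date))
    if oclc_number:
        # info:oclcnum/ is the info URI namespace for OCLC numbers
        params.append(("rft_id", f"info:oclcnum/{oclc_number}"))
    return f"{resolver_base}?{urlencode(params)}"
```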

Phase VI. Future users

A. Final Metadata Repository needs to be searchable, or to offer an interface for batch checking large sets of data, to see if material has been scanned or is intended to be scanned. This is a mash up of the New Production Level Metadata Bucket and the dirty bucket.

1. Future partners can batch check titles

2. Future funding requests need statistics

3. Other uses

EC @ NHM:

I would be tempted to place this much earlier on, as BHL partner institutions are going to need a method of avoiding scanning the same material, even if other measures are used to tell them what to scan. As the functionality is so similar, why not combine the two? It just seems simpler to allow non-partner institutions to access the same scanning registry that partners do (and avoid any duplicate functionality). However, having said that, this data certainly needs to be exposed to the public who might want to participate, and to OCLC’s Digital Intention Product (as below). We’ve put some ideas behind this onto our workflow diagram.
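Batch checking could be keyed on OCLC numbers, as in Phase V. A sketch assuming the registry is a mapping from normalized OCLC number to status; the prefix stripping covers the common `(OCoLC)`, `ocm`, `ocn` and `on` forms, and both function names are hypothetical. The status counts double as statistics for funding requests.

```python
import re
from collections import Counter


def normalize_oclc(raw: str) -> str:
    """Strip common OCLC number prefixes ((OCoLC), ocm, ocn, on) and leading zeros."""
    s = re.sub(r"^\(OCoLC\)", "", raw.strip())
    s = re.sub(r"^(ocm|ocn|on)", "", s).lstrip("0")
    if not s.isdigit():
        raise ValueError(f"not an OCLC number: {raw!r}")
    return s


def batch_check(candidates: list[str],
                registry: dict[str, str]) -> tuple[dict[str, str], Counter]:
    """Look each candidate up in a registry keyed by normalized OCLC number.

    Returns the status per candidate plus a count per status for reporting.
    """
    results = {raw: registry.get(normalize_oclc(raw), "not registered")
               for raw in candidates}
    return results, Counter(results.values())
```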

B. Final Metadata Repository needs to connect the pieces shown in the Chris Freeland BHL Interface

1. Title Level discovery

2. Article Level discovery

3. Page Level discovery

4. Taxonomic Intelligent discovery

5. Navigation within documents

6. Navigation within BHL

7. Navigation out to other biodiversity resources/datasets

8. Qualities of current ideal interface

a. navigation

b. zoom images

c. taxonomic intelligent searching and retrieving

d. editable OCR

e. Printable and savable chunks of data

9. All other stuff

C. Final Metadata Repository and Image Plus Storage House need to be archived

1. Metadata to PREMIS?

2. PDF/A

3. Other

EC @ NHM:

• PREMIS is quite work intensive and mainly describes actions that alter files, something we may not want to do in the short term. I’d be tempted to wait and see how other users fare with it at first. Are we going to record technical metadata on files anyway, using Z39.87 or something similar? The JHOVE software is being used by many digital repository sites at the moment.

• MD5 or similar checksums for all material are a must, and should be incorporated into the metadata (in PREMIS, I think). I’m sure the IA can and does produce these. They need to be recorded.

• As files are whizzing about all over the shop, we will need the security of this kind of verification to ensure files do not get corrupted.

• The BHL’s initial focus rightly seems to me to be on image creation, enrichment and access. As well as housing it all in the Internet Archive, maybe at a later stage we would also want to consider partnering with other institutions, perhaps someone working towards (or who by that time has) Trusted Digital Repository status?
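A sketch of the checksum point using Python’s `hashlib`: build an MD5 manifest for a folder of deliverables and re-verify it after each transfer. Where the manifest is finally stored (PREMIS or elsewhere) is left open, and the function names are hypothetical.

```python
import hashlib
from pathlib import Path


def file_md5(path: Path, chunk_size: int = 1 << 20) -> str:
    """MD5 of a file, read in chunks so large TIFFs need not fit in memory."""
    digest = hashlib.md5()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def make_manifest(folder: Path) -> dict[str, str]:
    """Map each file's path (relative to folder) to its MD5."""
    return {p.relative_to(folder).as_posix(): file_md5(p)
            for p in sorted(folder.rglob("*")) if p.is_file()}


def verify(folder: Path, manifest: dict[str, str]) -> list[str]:
    """Return the files that are missing or whose checksum has changed."""
    bad = []
    for rel, expected in manifest.items():
        p = folder / rel
        if not p.is_file() or file_md5(p) != expected:
            bad.append(rel)
    return bad
```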

Required Specs

Bucket of Metadata

• Fetchable from scanner at barcoded level

• Records exportable

• Able to record owner of record

• Able to match up with same intellectual units from other owners

• Able to record that the scanner fetched the record

• Able to record if the scanner rejected it

• Fetchable for external system to call on for Intent to scan

• Able to take notes on scanning issues from human input and from machine input

• Able to notify that record has been fetched and is being scanned - blocking other fetches?

• Circulation from stacks to scanner and then from scanner to next location

• Able to take in more data in dribs and drabs and in big chunks

• Able to export in dribs and drabs and in big chunks

• Able to take in new GUIDs if sent

• Able to purge records in dribs and drabs and in big chunks

• Takes in alerts from various phases of process

• Log file

Scanner software

• Needs to fetch metadata from Bucket

• Needs to ingest metadata and assign it to the proper image sequence

• Needs to interact with bucket to give back GUIDs

• Needs to interact with bucket for circulation issues

• Needs to interact with bucket for hand edits of data and notes filed

• Needs to produce TIFFs

• Needs to produce JPEGs

• Needs to create file names

• Needs to deal with multivolume with same title level metadata indicating changes

• Needs to deal with page number and quality control

• Export all files to Image Storage House and New Production Level Metadata Repository

• Log file

Image Storage House

• Log file

• Takes images from scanner

• Takes all files created by scanner

• Takes all associated files from bucket

• Alert system to tell that stage is done

Image Plus Storage House

• Log file

• Processes images

• Creates OCR

• Creates associated files

• Interprets image files so their structure is known, and deals with metadata files

• Takes in files as they are created in various steps – Luratech/ CCS Loop and UBio

• Takes in files from external sources if not from traditional scanning set up

• Verifies, Quality Control, etc.

• Delivers files as they are called on by various needs

• Opens all files in a fetchable way

• Interfaces with whatever Open Content Alliance needs etc.

• Alert system to tell that stages are done

• Deals with GUIDs

• Deals with URL

• Interacts with pointers from METS wrapped deliverable

• Interacts with database structure for deliverables

• Editable by human or machine

• Delivers anything to the public at various stages

• Stores everything

• Stores everything in Archival format or delivers it to deep, dark archive?

New Production Level Metadata Bucket

• Log file

• Take in data from scanner

• Take in data from the Image Storage House and the Image Plus Storage House

• Take in data from OCR

• Take in data from Structured PDF and other sources

• Link to Taxonomic Intelligence

• Link to other features

• Editable by human intervention and machine editing

• Harvestable by standard protocols

• Outputs METS with appropriate pointers

• GUID manager

• Supports navigation and deliverables of BHL